Genetic Algorithm for Image Classification: A Review

Abstract

Convolutional neural networks (CNNs) are currently the most widely used deep neural network models and have been applied successfully to image classification and other tasks. A CNN is a neural network architecture that operates on two-dimensional arrays (typically images) using spatially localized inputs. Its performance is highly dependent on its architecture and hyperparameter configuration. The architectures of most state-of-the-art CNNs are designed manually by experts in both CNNs and the problem under investigation. This paper reviews how genetic algorithms are used to design and tune CNNs, especially for image classification.

Keywords: CNN, Image Classification, Genetic Algorithm

Introduction

The convolutional neural network (CNN) is one of the most widely used and popular deep learning networks [1]. CNNs have outperformed most machine learning approaches in a wide range of real-world applications, and much of the current popularity of deep learning is due to them. The main advantage of a CNN over its predecessors is that it detects significant features automatically, without human intervention. For large-scale visual recognition, the deep CNN is the state-of-the-art solution. It is well known that the performance of CNNs depends heavily on their architecture [2]. To achieve high performance, the architectures of state-of-the-art CNNs such as GoogLeNet [3], ResNet [4], DenseNet [5], and AlexNet [6] were all designed manually by experts with extensive domain knowledge of both the investigated data and CNNs. Following some fundamental principles, such as increasing network depth and establishing highway connections, researchers manually designed and validated a large number of fixed network architectures. Algorithms that design CNN architectures automatically can therefore widen the adoption of CNNs and promote the development of machine intelligence.

Genetic algorithms (GAs), genetic programming (GP), and evolutionary strategies (ES) are typical evolutionary algorithms, with GAs being the most popular owing to their theoretical foundations [7] and promising performance on various optimization problems [8-10]. GAs are metaheuristics inspired by natural selection and are frequently used to find high-quality solutions to optimization and search problems through bio-inspired operators such as mutation, crossover, and selection. Nag and Pal [11] developed a new mutation operator that exploits fitness and unfitness for feature selection and classification using an ensemble method. Sun et al. [12] examined both “automatic” and “evolutionary algorithm-based” CNN architecture designs and proposed an effective and efficient algorithm, called CNN-GA, that automatically searches for well-performing CNN architectures. The discovered CNN can be used directly, without any manual tweaking.

Literature Review

In Genetic CNN, encoding the entire architecture of a CNN is a multi-stage process. Each stage encodes a small architecture, and multiple distinct small architectures are then stacked to form the entire architecture. Genetic CNN is provided with a CNN architecture framework, and the small architectures replace the convolutional layers in that framework, resulting in the final CNN. These small architectures are referred to as cells, and one cell is designed at each stage. In each stage, multiple predefined CNN building blocks are ordered and their connections are encoded. The first and last building blocks are specified manually, while the remaining ones are all convolutional layers with the same settings. The connections between these ordered building blocks are encoded using a binary string encoding method [13].
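To make the binary string encoding concrete, the following minimal sketch decodes the bit string of one stage into connections between ordered building blocks. The bit layout (one bit per pair of nodes, grouped by the later node) and the function name `decode_stage` are assumptions made for illustration; the reviewed text states only that connections are encoded as a binary string.

```python
from itertools import combinations


def decode_stage(bits: str, num_nodes: int) -> list[tuple[int, int]]:
    """Decode a binary string into directed edges between ordered nodes.

    Assumed layout: one bit per node pair (i, j) with i < j, listed by
    increasing j and then increasing i. A '1' means node i feeds node j.
    """
    pairs = sorted(combinations(range(1, num_nodes + 1), 2),
                   key=lambda p: (p[1], p[0]))
    if len(bits) != len(pairs):
        raise ValueError(f"expected {len(pairs)} bits, got {len(bits)}")
    return [pair for bit, pair in zip(bits, pairs) if bit == "1"]


# Four nodes need 4 * 3 / 2 = 6 bits; this string encodes a simple chain.
edges = decode_stage("101001", num_nodes=4)
print(edges)  # [(1, 2), (2, 3), (3, 4)]
```

Because every individual in a stage has the same string length under this layout, standard bit-flip mutation and crossover can be applied to it directly.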

David et al. [14] demonstrated how a GA-assisted method improves the performance of a deep autoencoder on the MNIST dataset. In the GA population, they treated each set of autoencoder weights as a single chromosome and calculated the fitness score as the inverse of the root mean squared error (RMSE). After ranking all chromosomes by fitness score, they used backpropagation to update the weights of high-ranking chromosomes and replaced low-ranking chromosomes with offspring of high-ranking ones. The fitness score was used solely to eliminate low-ranking chromosomes from the population; selection among the remaining chromosomes was uniform, with equal probability. The GA-assisted method consistently outperformed backpropagation alone in terms of reconstruction error and network sparsity.
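The ranking-and-replacement scheme described above can be sketched as follows. This is a toy illustration, not the authors' implementation: the backpropagation step is omitted, the crossover operator (uniform crossover) and the `keep` fraction are assumptions, and the names `inverse_rmse` and `replace_low_ranked` are hypothetical.

```python
import math
import random


def inverse_rmse(target: list[float], output: list[float]) -> float:
    """Fitness as the inverse of the root mean squared error."""
    mse = sum((t - o) ** 2 for t, o in zip(target, output)) / len(target)
    return 1.0 / (math.sqrt(mse) + 1e-12)  # small constant avoids division by zero


def replace_low_ranked(population, fitness_fn, keep=0.5, rng=random):
    """Keep the top-ranked chromosomes and refill with their offspring.

    Parents are drawn uniformly from the survivors, so fitness is used
    only to decide which chromosomes are removed.
    """
    ranked = sorted(population, key=fitness_fn, reverse=True)
    survivors = ranked[: max(2, int(len(ranked) * keep))]
    children = []
    while len(survivors) + len(children) < len(population):
        a, b = rng.sample(survivors, 2)  # uniform parent selection
        children.append([rng.choice(genes) for genes in zip(a, b)])  # assumed uniform crossover
    return survivors + children


# Toy usage: the "network output" is simply the chromosome itself.
rng = random.Random(0)
target = [0.0, 0.0, 0.0]
population = [[rng.uniform(-1, 1) for _ in target] for _ in range(6)]
new_population = replace_low_ranked(
    population, lambda w: inverse_rmse(target, w), rng=rng)
```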

Suganuma et al. [15] proposed using Cartesian Genetic Programming (CGP) to generate the CNN structure and connectivity. To reduce the search space, CGP’s node functions were chosen to be convolutional blocks, tensor concatenation, and other high-level functional modules. After training each network on the training data in the conventional manner, they calculated the fitness score from its accuracy on the validation dataset. They evaluated the evolved CNN models on the CIFAR10 dataset and found that CGP-CNN(ConvSet) and CGP-CNN(ResSet) had error rates of 6.34 percent and 6.05 percent, respectively. Loshchilov and Hutter [16] optimized the hyperparameters of deep neural networks (DNNs) using the Covariance Matrix Adaptation Evolution Strategy (CMA-ES). On the MNIST dataset, they compared the performance of CMA-ES with that of other state-of-the-art algorithms for tuning the hyperparameters of a CNN.

Liu et al. [17] discussed in detail the advantages and disadvantages of a neural network classifier evolved by a genetic algorithm. Their approach combines a real-coded GA strategy with the backpropagation algorithm. The genetic operators are carefully designed to optimize the neural network while avoiding premature convergence and permutation problems. The algorithm was validated using SPOT-4 XS imagery. Preliminary research indicates that a hybrid GA-based neural network classifier can achieve a higher overall accuracy when classifying high-resolution land cover. The experiment and analysis of the CBERS data indicate that a well-designed GA-based neural network outperforms a gradient descent-based neural network. This is supported by an analysis of the changes in the neural network’s connection weights and biases.

Genetic Algorithm for Image Classification

Sun et al. [18] pioneered the use of ResNet and DenseNet blocks to automatically evolve CNN architectures [19]. They generated the CNN architecture using a combination of three different units: ResNet block units, DenseNet block units, and pooling layer units. Each ResNet or DenseNet unit contained multiple ResNet blocks and DenseNet blocks, which contributed to the network’s depth and increased the speed of heuristic search by altering the network’s depth. They compared their model’s performance on the CIFAR10 and CIFAR100 benchmarks to that of 18 state-of-the-art algorithms.

Sun et al. [12] proposed an automatic architecture design algorithm for CNNs based on GAs (dubbed CNN-GA), which can discover well-performing CNN architectures for users who lack expertise in tuning them. They adapted the skip connection concept of ResNet [4] to create deeper networks, and their architectures are generated automatically from a combination of skip layers and pooling layers. The goal was accomplished by developing a new GA encoding strategy that allows CNNs of arbitrary depth to be encoded, incorporating skip connections to encourage the production of deeper CNNs during evolution, and developing a parallel component and a cache component that significantly accelerate fitness evaluation under limited computational resources. The proposed algorithm was evaluated on two difficult benchmark datasets and compared to 18 state-of-the-art peer competitors: eight manually designed CNNs, six automatic and manually tuned CNNs, and four automatic algorithms that discover CNN architectures. The experimental results show that CNN-GA outperforms almost all manually designed CNNs and automatic peer competitors in classification accuracy and is competitive with the automatic and manually tuned peer competitors. CNN-GA discovered a CNN with significantly fewer parameters than the majority of peer competitors, and it consumes significantly less computational power than most of the automatic and automatic+manual tuning peer competitors. CNN-GA is also fully automated, so users can apply it directly to their own image classification problems regardless of whether they have domain expertise in CNNs or GAs. Additionally, the CNN architecture that CNN-GA evolved on CIFAR10 shows promising performance when applied to the ImageNet dataset.

CNN-GA includes two components that were designed to speed up the fitness evaluation while conserving computational resources. However, the computational resources required are still quite large in comparison to those used by GAs to solve traditional problems. Numerous algorithms based on evolutionary computation techniques have been developed for the purpose of solving expensive optimization problems.
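The idea behind a fitness cache can be illustrated with a short sketch: if two individuals encode the same architecture, the expensive training run is performed only once. The encoding strings, the class name `FitnessCache`, and the stand-in function `train_and_validate` are hypothetical and are not taken from CNN-GA itself.

```python
from typing import Callable


class FitnessCache:
    """Memoize fitness values keyed by an architecture's encoded string."""

    def __init__(self, evaluate: Callable[[str], float]) -> None:
        self._evaluate = evaluate
        self._store: dict[str, float] = {}
        self.misses = 0  # number of expensive evaluations actually run

    def fitness(self, encoding: str) -> float:
        if encoding not in self._store:
            self.misses += 1
            self._store[encoding] = self._evaluate(encoding)
        return self._store[encoding]


def train_and_validate(encoding: str) -> float:
    """Hypothetical stand-in for training a CNN and returning its accuracy."""
    return len(encoding.split("-")) / 10.0


cache = FitnessCache(train_and_validate)
population = ["skip32-pool-skip64", "skip32-pool", "skip32-pool-skip64"]
scores = [cache.fitness(arch) for arch in population]
print(cache.misses)  # 2: the repeated architecture is evaluated only once
```

Since crossover and mutation often leave some individuals unchanged between generations, such a cache can remove a noticeable share of training runs; the actual saving depends on how often architectures repeat.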

GA Implementation

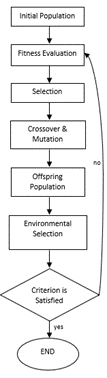

The GA evolves in the following manner:

- Initialization of the population

- Evaluation of each member’s fitness

- Parent selection to produce offspring for the next generation

- Crossbreeding of the selected parents to produce offspring (i.e., “crossover”)

- Random mutation of the offspring

- Repetition of evaluation, reproduction, and mutation
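The evolution steps listed above can be sketched as a short loop. This is a minimal illustration on a toy problem (maximizing the number of ones in a bit string), not the method of any reviewed paper; binary tournament selection, one-point crossover, and all parameter values are assumptions chosen for brevity.

```python
import random


def run_ga(fitness, genome_len=20, pop_size=30, generations=40,
           mutation_rate=0.01, seed=0):
    rng = random.Random(seed)
    # 1. Initialize the population with random bit strings.
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # 2. Evaluate each member's fitness.
        scores = [fitness(ind) for ind in population]

        def pick():
            # 3. Parent selection: binary tournament (an assumed choice).
            i, j = rng.sample(range(pop_size), 2)
            return population[i] if scores[i] >= scores[j] else population[j]

        offspring = []
        while len(offspring) < pop_size:
            mother, father = pick(), pick()
            # 4. One-point crossover of the two parents.
            cut = rng.randint(1, genome_len - 1)
            child = mother[:cut] + father[cut:]
            # 5. Random bit-flip mutation of the offspring.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            offspring.append(child)
        # 6. Repeat with the new generation.
        population = offspring
    return max(population, key=fitness)


best = run_ga(fitness=sum)  # toy fitness: count of ones in the genome
print(best, sum(best))
```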

The integration of the GA and the CNN consists of the following steps:

- Randomly initializing the values of each chromosome

- Substituting the CNN’s weights with the values of a particular chromosome

- Simulating the CNN with the new weights (this produces an output image)

- Calculating the current member’s fitness by subtracting the resultant image from the target

- Repeating the simulation for each member of the population

- Using a roulette-wheel scheme to select the next generation’s parents

- Producing new children by crossing over the parents’ genes

- Mutating each child with a 1% chance

- Repeating the simulation, evaluation, reproduction, and mutation steps until the evaluation criteria are met

Fig. 1. The flowchart of a genetic algorithm
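The integration steps listed above can be sketched on a deliberately tiny example, in which a single 3×3 convolution kernel stands in for the CNN. Everything here is an illustrative assumption: the helper names, the use of the negated summed absolute difference as fitness, the shift that keeps roulette weights positive, and the interpretation of the 1% mutation chance as a per-gene probability.

```python
import random


def convolve(image, kernel):
    """Valid 2-D convolution (cross-correlation) with a 3x3 kernel."""
    h, w = len(image), len(image[0])
    return [[sum(kernel[a][b] * image[r + a][c + b]
                 for a in range(3) for b in range(3))
             for c in range(w - 2)] for r in range(h - 2)]


def fitness(chromosome, image, target):
    """Higher is better: negated summed absolute output-target difference."""
    kernel = [chromosome[0:3], chromosome[3:6], chromosome[6:9]]
    output = convolve(image, kernel)
    return -sum(abs(o - t) for out_row, tgt_row in zip(output, target)
                for o, t in zip(out_row, tgt_row))


def roulette_select(population, scores, rng):
    """Pick one parent with probability proportional to shifted fitness."""
    low = min(scores)
    weights = [s - low + 1e-9 for s in scores]  # shift so all weights are positive
    return rng.choices(population, weights=weights, k=1)[0]


def make_child(mother, father, rng, mutation_rate=0.01):
    """One-point crossover followed by per-gene mutation at a 1% rate."""
    cut = rng.randint(1, len(mother) - 1)
    child = mother[:cut] + father[cut:]
    return [rng.uniform(-1, 1) if rng.random() < mutation_rate else g
            for g in child]


rng = random.Random(0)
image = [[rng.random() for _ in range(5)] for _ in range(5)]
identity = [0, 0, 0, 0, 1, 0, 0, 0, 0]  # kernel that reproduces the image centre
target = convolve(image, [identity[0:3], identity[3:6], identity[6:9]])

# Random chromosomes, each holding the nine weights of one kernel.
population = [[rng.uniform(-1, 1) for _ in range(9)] for _ in range(20)]
for _ in range(30):
    scores = [fitness(ch, image, target) for ch in population]
    population = [make_child(roulette_select(population, scores, rng),
                             roulette_select(population, scores, rng), rng)
                  for _ in population]
best = max(population, key=lambda ch: fitness(ch, image, target))
```

A real CNN has far more weights than nine, so each chromosome would be correspondingly longer and each fitness evaluation correspondingly more expensive.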

Oullette et al. [20] proposed using a genetic algorithm (GA) to find the optimum weights of a convolutional neural network (CNN) for detecting cracks in building structures. They presented 100 crack images (320×240 pixels) to 25 GA-trained CNNs in total. On average, the GA-tuned CNN detected cracks at a rate of 92.3 ± 1.4 percent. In comparison, the CNN trained using backpropagation had a success rate of 89.8 ± 3.2 percent. The standard GA used to tune the CNN performed no better statistically than the backpropagation method. They chose not to use a more advanced GA because, while the results might become statistically significant, the crack detector would not reach human-level detection without additional intelligence added to the system. During GA training, the fitness of each member is determined by subtracting the resultant image from the target image. To speed up the learning process, a progressive training approach from small to large images is used. Through the experiment, it is found that while training the weight of the CNN using GA approach is simpler to im

Conclusion

This review has surveyed the state of the art in genetic algorithms for image classification, showing how GAs are applied to find well-performing CNN architectures in computer vision. Such methods help practitioners who are not familiar with the many complex architectures available to obtain a suitable network without losing much accuracy, and they save time compared with manual trial and error. GAs can also be applied in many other fields where parameters must be searched to maximize a fitness function.

References

[1] Dhillon, A., & Verma, G. K. (2020). Convolutional neural network: a review of models, methodologies and applications to object detection. Progress in Artificial Intelligence, 9(2), 85-112.

[2] Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

[3] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., … & Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1-9).

[4] He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770-778).

[5] Huang, G., Liu, Z., Van Der Maaten, L., & Weinberger, K. Q. (2017). Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4700-4708).

[6] Alom, Z., Taha, T. M., Yakopcic, C., Westberg, S., Sidike, P., Nasrin, M. S., … & Asari, K. (2018). The history began from alexnet: A comprehensive survey on deep learning approaches. arXiv preprint arXiv:1803.01164.

[7] Schmitt, M. (2001). Theory of genetic algorithms. Theoretical Computer Science, 259(1-2), 1-61.

[8] Sun, Y., Yen, G. G., & Yi, Z. (2018). IGD indicator-based evolutionary algorithm for many-objective optimization problems. IEEE Transactions on Evolutionary Computation, 23(2), 173-187.

[9] Sun, Y., Yen, G. G., & Yi, Z. (2017). Reference line-based estimation of distribution algorithm for many-objective optimization. Knowledge-Based Systems, 132, 129-143.

[10] Jiang, M., Huang, Z., Qiu, L., Huang, W., & Yen, G. G. (2017). Transfer learning-based dynamic multiobjective optimization algorithms. IEEE Transactions on Evolutionary Computation, 22(4), 501-514.

[11] Nag, K., & Pal, N. R. (2015). A multiobjective genetic programming-based ensemble for simultaneous feature selection and classification. IEEE Transactions on Cybernetics, 46(2), 499-510.

[12] Sun, Y., Xue, B., Zhang, M., Yen, G. G., & Lv, J. (2020). Automatically designing CNN architectures using the genetic algorithm for image classification. IEEE Transactions on Cybernetics, 50(9), 3840-3854.

[13] Srinivas, M., & Patnaik, L. M. (1994). Genetic algorithms: A survey. Computer, 27(6), 17-26.

[14] David, O. E., & Greental, I. (2014, July). Genetic algorithms for evolving deep neural networks. In Proceedings of the Companion Publication of the 2014 Annual Conference on Genetic and Evolutionary Computation (pp. 1451-1452).

[15] Suganuma, M., Shirakawa, S., & Nagao, T. (2017, July). A genetic programming approach to designing convolutional neural network architectures. In Proceedings of the Genetic and Evolutionary Computation Conference (pp. 497-504).

[16] Loshchilov, I., & Hutter, F. (2016). CMA-ES for hyperparameter optimization of deep neural networks. arXiv preprint arXiv:1604.07269.

[17] Liu, Z., Liu, A., Wang, C., & Niu, Z. (2004). Evolving neural network using real coded genetic algorithm (GA) for multispectral image classification. Future Generation Computer Systems, 20(7), 1119-1129.

[18] Sun, Y., Xue, B., Zhang, M., & Yen, G. G. (2018). Automatically evolving CNN architectures based on blocks. arXiv preprint arXiv:1810.11875.

[19] Huang, G., Liu, Z., Van Der Maaten, L., & Weinberger, K. Q. (2017). Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4700-4708).

[20] Oullette, R., Browne, M., & Hirasawa, K. (2004, June). Genetic algorithm optimization of a convolutional neural network for autonomous crack detection. In Proceedings of the 2004 Congress on Evolutionary Computation (IEEE Cat. No. 04TH8753) (Vol. 1, pp. 516-521). IEEE.

Dewa Ayu Defina A.N, Boy Sugijakko, Brillian Fieri