Pemanfaatan Model Encoder IndoBERT dan Decoder mT5 untuk Ringakasan Teks Abstraktif pada Dataset Berita berbahasa Indonesia

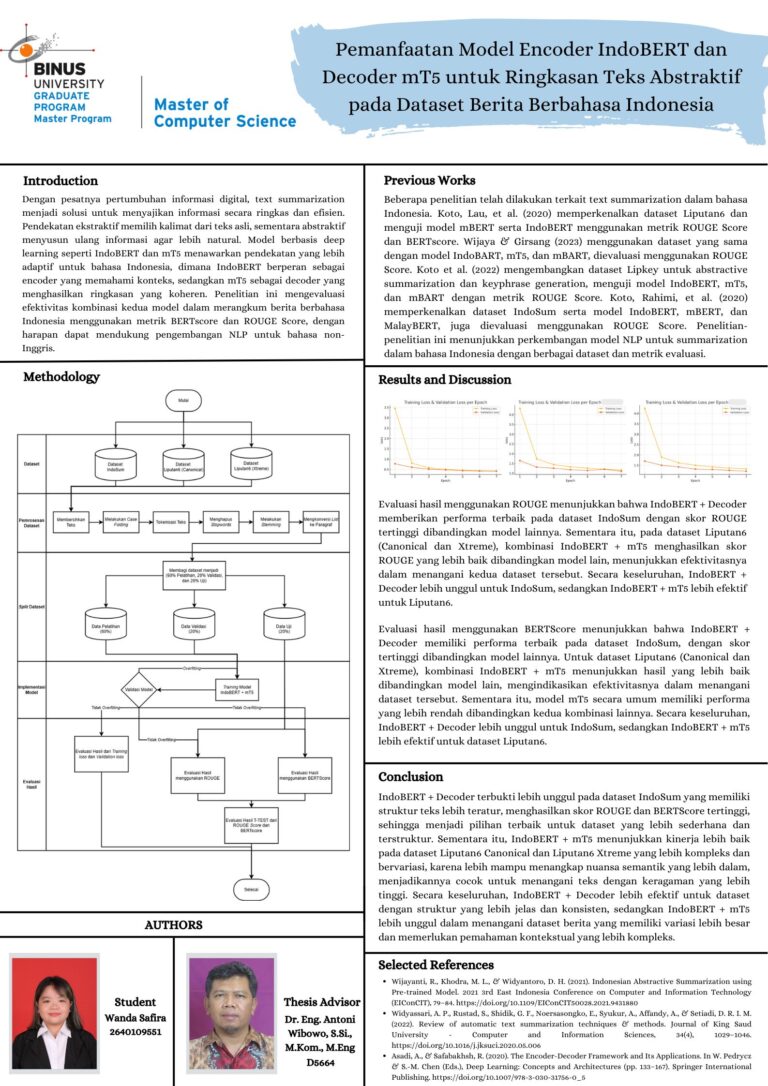

Automatic text summarization is a challenging task in Natural Language Processing (NLP), especially for low-resource languages like Indonesian. This research proposes a model utilizing IndoBERT as the encoder and mT5 as the decoder to generate abstractive text summaries for Indonesian news articles. The model is trained on IndoSum, Liputan6 Canonical, and Liputan6 Xtreme datasets, each consisting of 50,000 samples. The preprocessing steps include tokenization, stemming, and stopword removal to enhance data quality. The model is trained using the AdamW optimizer, with a batch size of 8, a learning rate of 3e-5, and 7 epochs, while applying gradient accumulation to manage GPU memory limitations. Evaluation using ROUGE and BERTScore shows that IndoBERT + mT5 outperforms other models on Liputan6 Canonical and Liputan6 Xtreme datasets, achieving ROUGE-L scores of 0.4279 and 0.3874, and BERTScore of 0.7941 and 0.7828. Meanwhile, on the IndoSum dataset, IndoBERT + Decoder achieves the highest scores with ROUGE-L of 0.7363 and BERTScore of 0.8899. The results indicate that IndoBERT effectively captures Indonesian linguistic structures, while mT5 generates more coherent and relevant summaries. This combination offers a promising approach to abstractive text summarization for Indonesian news articles.

Keywords: IndoBERT, mT5, Abstractive Text Summarization, NLP, Indonesian news

Comments :